I can now buy one 24-pack of White Monster per month with my SaaS MRR

I’ve now officially hit the MRR milestone where I can afford a 24-pack of White Monster every month. Huge. Another founder might celebrate revenue milestones with dashboards or CAC:LTV ratios, but I prefer real metrics and keeping it simple.

“What’s your strategy with this posting?”

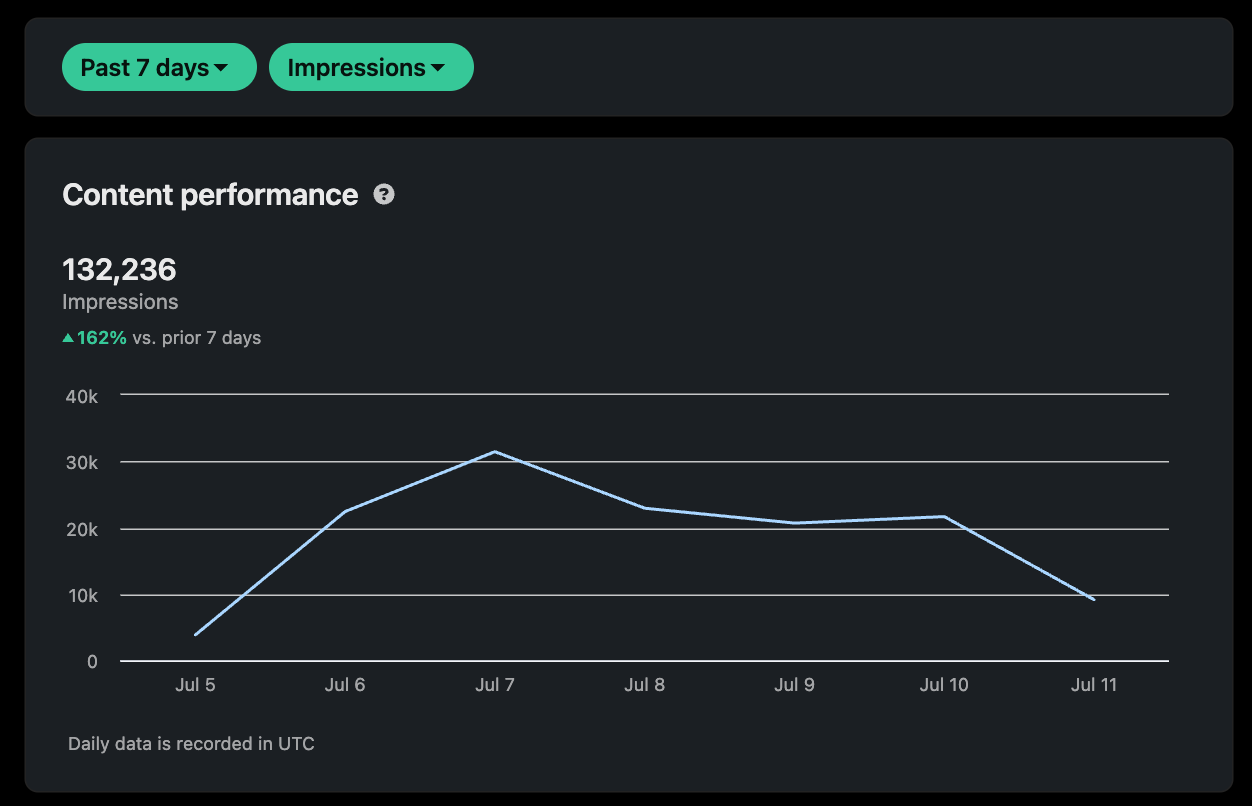

I get that question every other day in my DMs. Someone sees one of my low-engagement LinkedIn posts and probably thinks I have no clue what I’m doing. And yeah, some of them get like 12 likes and zero comments. But that’s not the point.

The point is that I post a lot, and enough of them hit. I’m still pulling in 100K+ views per week from LinkedIn just from having fun and shitposting while building. And some of those have converted into sales for Pitchkit.

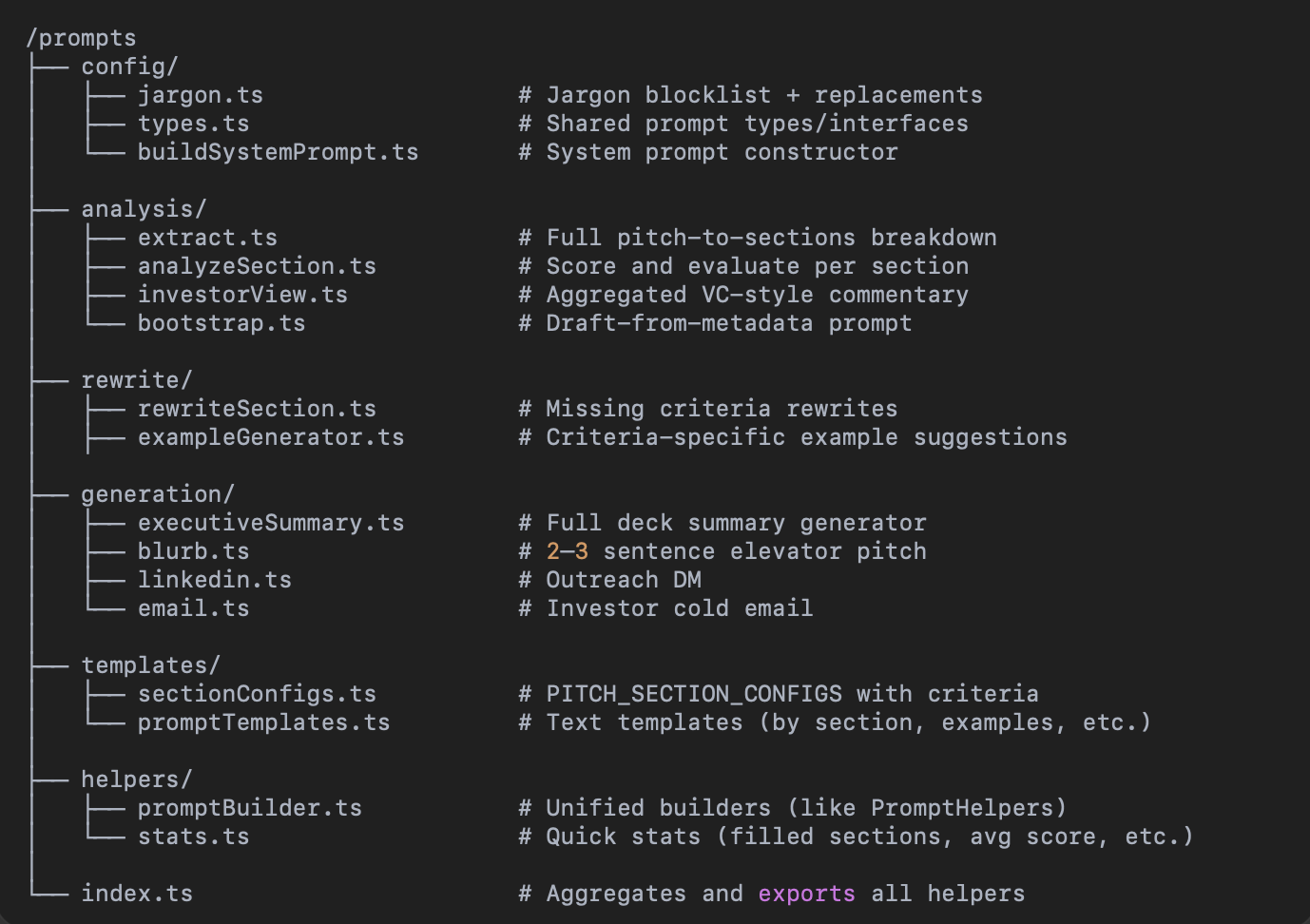

Rebuilding the Prompt Logic: From 1,000-line Beast to Modular System

Behind the scenes, I hit another milestone: refactoring the whole Pitchkit prompt logic.

When I first built the SaaS in 6 days, I had just one file called something like pitchPrompt.txt. It was fine when I had 3 sections. Then it turned into 1,000 lines of spaghetti: slow, clunky, unmaintainable.

So I took a step back and asked ChatGPT:

“What are the best practices right now for building structured prompt files for multi-function AI tools?”

Now I’ve modularized the whole thing like this:

- config/: shared logic, type safety, anti-jargon logic

- analysis/: score, evaluate, extract VC-style reviews

- rewrite/: rewrite missing pieces and generate examples

- generation/: output formats like summary, blurbs, LinkedIn, email

- templates/: section-level configs and text templates

- helpers/: prompt builder and stats (what’s missing, avg score)

- index.ts: aggregates everything neatly
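For illustration only, a layout like this might be wired together roughly as follows. Everything is squeezed into one file so it runs on its own. The rule strings, function names, and prompt wording are made up for the example and are not Pitchkit's actual code.

```typescript
// Hypothetical sketch. In a real repo, each part below would live in its own
// folder and index.ts would re-export them.

// config/: shared rules every prompt inherits (example wording is assumed)
const sharedRules: string[] = [
  "Avoid jargon and buzzwords.",
  "Prefer concrete numbers over adjectives.",
];

// helpers/: a tiny prompt builder that joins a task, the shared rules, and the input
function buildPrompt(task: string, input: string): string {
  return [
    task,
    "Rules:",
    ...sharedRules.map((rule) => `- ${rule}`),
    "Input:",
    input,
  ].join("\n");
}

// analysis/, rewrite/, generation/: one small prompt function per job
const analysis = {
  score: (section: string): string =>
    buildPrompt("Score this pitch section from 1 to 10 and explain why.", section),
};

const rewrite = {
  fillGaps: (section: string): string =>
    buildPrompt("Rewrite this section so it covers the missing criteria.", section),
};

const generation = {
  summary: (pitch: string): string =>
    buildPrompt("Write a three-sentence summary of this pitch.", pitch),
  coldEmail: (pitch: string): string =>
    buildPrompt("Write a short cold email to an investor based on this pitch.", pitch),
};

// index.ts: one object that aggregates everything for the rest of the app
const prompts = { analysis, rewrite, generation };

// Usage: print the assembled cold-email prompt
console.log(prompts.generation.coldEmail("We help founders write better pitch decks."));
```

The payoff of this shape is that editing `sharedRules` changes every prompt at once, while editing one task string touches only that function.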

Prompt engineering best practices I applied

So this time I didn’t just yolo-vibe it. I actually had a proper discussion with ChatGPT, which walked me through the current state of the art in prompt design for tools with multiple functions and outputs.

Here’s what stood out and what I applied:

- Modular > Monolith: Keep prompts in manageable chunks per function (summary, cold email, rewrite, etc.) so you can update them without breaking the whole system.

- Add metadata to everything: Each prompt file carries context on funding stage, criteria, and role (e.g. “acting as a VC partner”). Makes the generation smarter and more context-aware.

- Separate configs and templates: Section configs define what “good” looks like. Templates generate specific outputs. Don’t mix these up.

- Inject evaluation logic: Added logic for things like red flags, weak traction signals, missing moat, vague USP. The system now scores sections before generating content.

- Use natural prompts, but strict structure: You can still prompt like a human, but keep the output JSON-structured for predictability.

- Don’t forget the rewrite layer: Sometimes a section doesn’t need full regeneration - just a rewrite to fit missing criteria. This layer saved a ton of time.
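To make a few of these concrete (metadata, scoring before generating, JSON-structured output, and the rewrite layer), here is a minimal sketch. The field names, the 0 to 10 scale, and the thresholds are assumptions for the example, not Pitchkit's real values, and the model reply is a hard-coded string rather than an actual API call.

```typescript
// Hypothetical sketch: every name and threshold here is illustrative.

interface PromptMeta {
  role: string;         // e.g. "a VC partner"
  fundingStage: string; // e.g. "pre-seed"
  criteria: string[];   // what "good" looks like for this section
}

interface SectionScore {
  score: number;     // 0 to 10
  missing: string[]; // criteria the section does not meet
}

type Action = "keep" | "rewrite" | "regenerate";

// Natural-language prompt, but with a strict JSON reply format.
function buildScoringPrompt(meta: PromptMeta, sectionText: string): string {
  return [
    `You are acting as ${meta.role} reviewing a ${meta.fundingStage} pitch.`,
    "Check the section against these criteria:",
    ...meta.criteria.map((c) => `- ${c}`),
    'Reply with JSON only: {"score": <0-10>, "missing": [<unmet criteria>]}',
    "Section:",
    sectionText,
  ].join("\n");
}

// Validate the model's reply instead of trusting it blindly.
function parseScore(raw: string): SectionScore {
  const data: unknown = JSON.parse(raw);
  if (typeof data !== "object" || data === null) {
    throw new Error("expected a JSON object");
  }
  const { score, missing } = data as { score?: unknown; missing?: unknown };
  if (typeof score !== "number" || score < 0 || score > 10) {
    throw new Error("score must be a number from 0 to 10");
  }
  if (!Array.isArray(missing) || !missing.every((m) => typeof m === "string")) {
    throw new Error("missing must be an array of strings");
  }
  return { score, missing };
}

// Score first, then decide: keep, rewrite the gaps, or regenerate from scratch.
function chooseAction(s: SectionScore): Action {
  if (s.score >= 7 && s.missing.length === 0) return "keep";
  if (s.score >= 4) return "rewrite";
  return "regenerate";
}

// Usage with a canned reply standing in for the model's output.
const meta: PromptMeta = {
  role: "a VC partner",
  fundingStage: "pre-seed",
  criteria: ["clear traction signal", "defensible moat", "specific USP"],
};
const scoringPrompt = buildScoringPrompt(meta, "We are the Uber for dog walking.");
const cannedReply = '{"score": 5, "missing": ["defensible moat"]}';
const action = chooseAction(parseScore(cannedReply));
console.log(scoringPrompt);
console.log(action);
```

Keeping `chooseAction` separate from the prompt text means the thresholds can be tuned without rewording any prompt.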

This setup makes future updates easier, improves consistency across pitch sections, and gets me to new features faster. Bonus: I’m now getting better investor-style output with more signal and less fluff.
